
Home Server/Setting up your Storage


== | == Basic Concepts == | ||

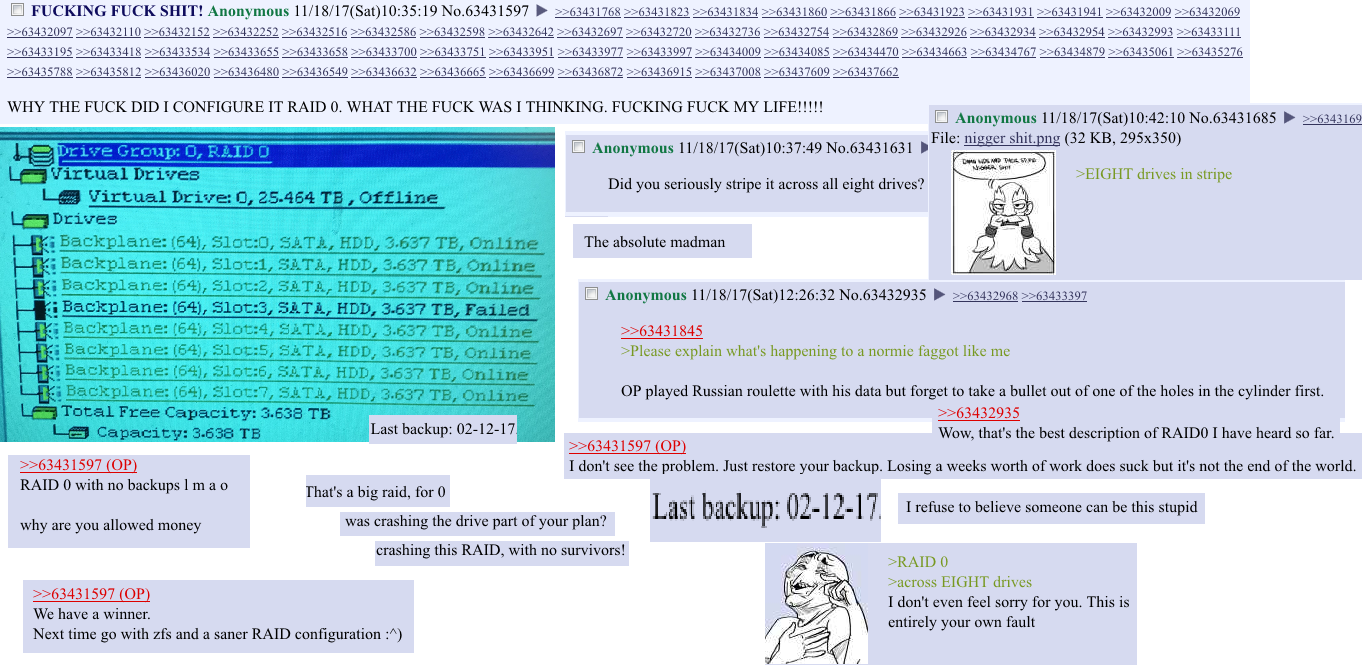

{{Note| | [[File:Raid_0.png|600px|thumb|right|Here lies anon's data. He never backed up.]] | ||

Note: I highly recommend you read the Wikipedia article on RAID before continuing. RAID is not for everyone. If you do not want to bother fiddling with RAID, or don't feel you need the uptime or redundancy, regular backups are perfectly fine.


Warning: USE PURE RAID 0 (OR EQUIVALENTS) ONLY ON CACHE DRIVES THAT NEED HIGH SPEED. DO NOT USE IT ON DATA DRIVES!


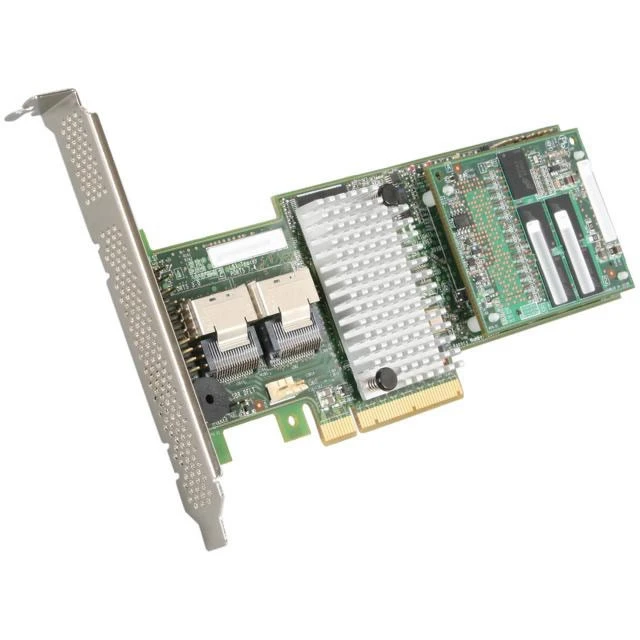

[[File:LSI_MegaRAID.png|400px|thumb|right|An example of a RAID controller card. Remember to flash the firmware if you plan on using it with software RAID.]] | |||


Note: There are a lot of misconceptions about ZFS and ECC RAM. ECC RAM is NOT required for ZFS to operate. However, ZFS was made to protect data against degradation, and not using ECC RAM to protect against memory errors (and thus data degradation) defeats the purpose of ZFS.


Tip: When setting up your drives in ZFS, do not use the raw disks. Instead, create a fixed-size partition on each disk that is a little smaller than the drive's full capacity, and use that.

Not all disks have exactly the same sector count. Later on, you may replace a failed disk with a drive of a different model or from a different manufacturer. Even at the correct nominal capacity, that drive may have fewer sectors, and ZFS will not let you use it (see http://www.freebsddiary.org/zfs-with-gpart.php).
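The tip above boils down to simple arithmetic. Below is a minimal Python sketch of it. It assumes a 1 GiB safety margin and 1 MiB alignment; both are illustrative choices, not ZFS requirements. The two drive sizes are hypothetical. Actually creating the partitions is left to your usual partitioning tool.

```python
# Sketch: choose a ZFS partition size a little smaller than the raw disk so a
# replacement drive with slightly fewer sectors still fits.
# ASSUMPTIONS: the 1 GiB margin and 1 MiB alignment are illustrative choices,
# and the drive sizes below are hypothetical.

MIB = 1024 ** 2
GIB = 1024 ** 3


def zfs_partition_bytes(disk_bytes: int, margin_bytes: int = GIB,
                        align_bytes: int = MIB) -> int:
    """Return a size at least `margin_bytes` smaller than the disk,
    rounded down to a multiple of `align_bytes`."""
    usable = disk_bytes - margin_bytes
    if usable < align_bytes:
        raise ValueError("disk is too small for the requested margin")
    return (usable // align_bytes) * align_bytes


if __name__ == "__main__":
    original_drive = 4_000_787_030_016     # hypothetical "4 TB" drive
    replacement_drive = 4_000_752_599_040  # hypothetical "4 TB" drive, slightly smaller
    size = zfs_partition_bytes(original_drive)
    print(f"partition size: {size} bytes ({size / GIB:.2f} GiB)")
    # Leave an assumed 1 MiB at the start of the disk for the partition table.
    print("replacement can hold it:", MIB + size <= replacement_drive)
```

Because the size is fixed once, every replacement disk only needs to be at least that big, not identical to the original.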


[Figure: Pooling_drives.png. Graphic overview of drive pooling options. Only LVM and ZFS are mutually exclusive: you can use mergerfs on top of ZFS, or on filesystems formatted on LVM LVs.]


Warning: Using LVM without any form of redundancy is not recommended. LVM spreads data across all member drives, which makes it effectively RAID 0: losing a single disk will result in significant data loss.


- mergerfs official GitHub: https://github.com/trapexit/mergerfs

- mergerfs how-to guide: https://www.teknophiles.com/2018/02/19/disk-pooling-in-linux-with-mergerfs/


Latest revision as of 11:25, 2 January 2023

Basic Concepts

REMEMBER RAID IS NOT A BACKUP! RAID only protects you against individual drive failures, not against accidental deletion, mother nature, or angry relatives. RAID is not a substitute for a proper backup. RAID can be used in conjunction with backups for superior uptime, reliability, performance, and data redundancy.

RAID levels

Here is a brief overview of the most commonly used RAID levels.

- RAID 0: Data striped across N drives. Provides significant performance improvements, but if one drive fails your whole array is toast.

- RAID 1: Data mirrored across N drives. All but 1 drive can fail. Only 1/N of the raw capacity is usable.

- RAID 10: 2 or more RAID 1 arrays combined in RAID 0. All but 1 drive can fail in each RAID 1 sub-array. Only 1/N of the raw capacity is usable, where N is the number of drives in each RAID 1 sub-array. Usually each RAID 1 has only 2 disks, also called "mirrored pairs", which gives 50% usable capacity.

- RAID 5: Data striped across 3 or more drives with parity. Up to one drive can fail and the array can still be rebuilt. Usable storage is (N-1) * drive capacity.

- RAID 6: Data striped across 4 or more drives with double parity. Up to two drives can fail and the array can still be rebuilt. Usable storage is (N-2) * drive capacity.

- RAID 50/60: 2 or more RAID 5/6 arrays combined in RAID 0.
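The capacity and fault-tolerance figures in the list above can be computed with a small helper. This is a minimal Python sketch with the following assumptions:

- All drives are the same size.
- For nested levels, the drives are split evenly into `groups` sub-arrays.
- The function name and parameters are made up for illustration.

The second value returned is the worst case: the number of failures the array survives no matter which drives fail.

```python
# Sketch: usable capacity and worst-case failure tolerance per RAID level.
# ASSUMPTIONS: equal-size drives; for nested levels (10, 50, 60) the n drives
# are split evenly into `groups` sub-arrays. Controller/filesystem overhead
# is ignored.


def raid_summary(level: str, n: int, drive_tb: float, groups: int = 1):
    """Return (usable_tb, failures_survived_in_the_worst_case)."""
    if groups < 1 or n % groups:
        raise ValueError("drives must split evenly into groups")
    per_group = n // groups
    if level == "0":
        return n * drive_tb, 0
    if level == "1":
        return drive_tb, n - 1
    if level == "10":
        # Each mirror contributes one drive's worth of space; the worst case
        # is every failure landing in the same mirror.
        return groups * drive_tb, per_group - 1
    if level in ("5", "50"):
        if per_group < 3:
            raise ValueError("RAID 5 needs at least 3 drives per array")
        return groups * (per_group - 1) * drive_tb, 1
    if level in ("6", "60"):
        if per_group < 4:
            raise ValueError("RAID 6 needs at least 4 drives per array")
        return groups * (per_group - 2) * drive_tb, 2
    raise ValueError(f"unknown RAID level: {level}")


if __name__ == "__main__":
    examples = [("0", 4, 1), ("1", 2, 1), ("10", 8, 4), ("5", 4, 1),
                ("6", 6, 1), ("60", 12, 2)]
    for level, n, groups in examples:
        usable, survives = raid_summary(level, n, drive_tb=4.0, groups=groups)
        print(f"RAID {level:>2}: {n} x 4 TB -> {usable:.0f} TB usable, "
              f"survives any {survives} failure(s)")
```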

Most software RAID solutions follow these basic concepts, but with a different approach and more capabilities than traditional hardware RAID. (Snapraid and unRAID use dedicated parity disks rather than distributed parity like RAID 5/6. Otherwise they behave like RAID 5/6: an array with N parity disks can lose up to N drives and still be rebuilt.)
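To see why a parity disk lets an array lose a drive and still be rebuilt, here is a minimal Python sketch of XOR parity. XOR is the simplest single-parity scheme and the one classically used by RAID 5. This is a conceptual toy:

- Snapraid's and unRAID's actual parity code is not shown.
- The double parity used by RAID 6 is not shown either.
- The short, equal-length "blocks" are made up.

```python
# Sketch: single XOR parity. The parity block is the XOR of all data blocks,
# so any ONE missing block equals the XOR of the surviving blocks and parity.
# ASSUMPTION: toy equal-length byte strings stand in for disk blocks.

from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length byte blocks together, byte by byte."""
    if len({len(b) for b in blocks}) > 1:
        raise ValueError("all blocks must have the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover the single lost data block from the survivors plus parity."""
    return xor_blocks(surviving + [parity])


if __name__ == "__main__":
    data_disks = [b"movies", b"photos", b"backup"]  # hypothetical contents
    parity_disk = xor_blocks(data_disks)

    # Pretend disk 1 died: rebuild it from disks 0 and 2 plus parity.
    recovered = rebuild_missing([data_disks[0], data_disks[2]], parity_disk)
    print(recovered)
```

Whether the parity lives on one dedicated disk (Snapraid, unRAID) or is rotated across all disks (RAID 5), the recovery math is the same. Only the placement differs.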

RAID 5 (and its equivalents) is generally no longer considered good enough. As hard drive capacities grow, rebuild times grow with them. The longer a rebuild takes, the more likely it is that another drive fails during it, and that takes the whole array with it. RAID 5 is OK on small arrays of 4 disks or fewer. I wouldn't risk it on larger arrays, where multiple failures are much more likely.
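The scaling argument above can be made concrete with a rough model. This minimal Python sketch assumes drives fail independently at a constant annualized failure rate (AFR). The AFR and rebuild times in the example are hypothetical. Treat the numbers as an optimistic floor for two reasons:

- Real failures are correlated: same batch, same age, and the stress of the rebuild itself.
- The model ignores other rebuild risks.

Look at how the probability grows with drive count and rebuild time rather than at its absolute value.

```python
# Rough sketch: probability that at least one more drive fails while a
# degraded array is rebuilding.
# ASSUMPTIONS: independent failures at a constant annualized failure rate
# (AFR). Real-world failures are correlated, so this is an optimistic floor.

import math

HOURS_PER_YEAR = 24 * 365


def p_failure_during_rebuild(remaining_drives: int, afr: float,
                             rebuild_hours: float) -> float:
    # Turn the AFR into a constant hourly rate: AFR = 1 - exp(-rate * 1 year).
    rate = -math.log(1.0 - afr) / HOURS_PER_YEAR
    p_one_drive = 1.0 - math.exp(-rate * rebuild_hours)
    # Chance that not every surviving drive makes it through the rebuild.
    return 1.0 - (1.0 - p_one_drive) ** remaining_drives


if __name__ == "__main__":
    afr = 0.02  # hypothetical 2% AFR
    for drives, hours in [(4, 12), (4, 48), (12, 48), (12, 96)]:
        p = p_failure_during_rebuild(drives - 1, afr, hours)
        print(f"{drives:>2} drives, {hours:>2} h rebuild: {p:.4%}")
```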

Read the Wikipedia article on standard RAID levels for a more in-depth explanation of these concepts.

RAID 6 vs RAID 10

RAID 10

- + Faster data transfer rates

- + Much faster rebuild times, since there is no parity to calculate

- - Less reliable data protection. Only one disk failure is guaranteed to be survivable. More failures can be survived if each one lands in a different mirrored pair, but at that point it is all down to chance

- - Lower storage efficiency. Only 50% of raw capacity is usable (with mirrored pairs)

RAID 6 (60)

- + Guaranteed protection against 2 drive failures (two per sub-array in RAID 60)

- + Better storage efficiency

- - Slower data transfer rates

- - Slower rebuild times due to parity calculations

If you want performance and aren't too worried about drive failures or storage efficiency, choose RAID 10. If you want storage efficiency and don't care much about speed, choose RAID 6 (or 60 if you have a really large array). For even more protection against drive failures, ZFS RAIDZ3 survives up to 3 drive failures per vdev.
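The "up to chance" point about RAID 10 can be checked by brute force. This Python sketch enumerates every combination of failed drives in a RAID 10 built from mirrored pairs. It counts how many combinations leave every mirror with at least one working drive. The function name is made up for illustration. RAID 6 survives every two-drive combination by design, so it is not simulated.

```python
# Sketch: fraction of simultaneous-failure combinations a RAID 10 of mirrored
# pairs survives. Drive i belongs to mirror i // 2; the array survives as long
# as no mirror has lost both of its drives.

from itertools import combinations


def raid10_survival_fraction(pairs: int, failures: int = 2) -> float:
    if not 0 <= failures <= 2 * pairs:
        raise ValueError("failures must be between 0 and the number of drives")
    drives = range(2 * pairs)
    outcomes = list(combinations(drives, failures))
    survived = sum(
        1 for failed in outcomes
        # Distinct mirror ids == number of failures means no mirror was hit twice.
        if len({d // 2 for d in failed}) == failures
    )
    return survived / len(outcomes)


if __name__ == "__main__":
    for pairs in (2, 3, 4, 6):
        frac = raid10_survival_fraction(pairs)
        print(f"{2 * pairs:>2}-drive RAID 10: survives {frac:.1%} of 2-drive failures")
```

For two failures the result works out to (2n - 2)/(2n - 1) for n mirrored pairs, so the odds improve with more pairs but never reach RAID 6's 100%.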

Software RAID vs Hardware RAID

Traditionally, RAID has been implemented using physical hardware RAID controllers, often called hardware RAID or "HardRAID". These controllers are often quite pricey and add upfront cost to buying or building a server.

Nowadays there are also software implementations of RAID, called "SoftRAID", which run without dedicated hardware controllers. Software RAID is a lot more flexible because it is not limited by the specs or hardware requirements of a controller card, so it works the same on any system. Modern SoftRAID solutions also come with extra features such as checksums for data integrity, support for triple or even sextuple parity, support for differently sized disks, and more.

See below for more on each individual RAID solution.

RAID Options

mdadm

ZFS

ZFS Concepts

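Adaptive Replacement Cache (ARC): ZFS uses RAM as a read cache, called the ARC.

RAM BEING USED AS ARC CANNOT BE USED BY RUNNING PROCESSES until ZFS releases it! The ARC does shrink under memory pressure, but it may give memory back more slowly than the regular page cache.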

Physical Disks are grouped into Virtual devices (Vdevs).

Vdevs are grouped into Zpools.

Datasets reside in Zpools.

The actual file system portion of ZFS is a dataset, which sits on top of the zpool. This is where you store all of your data. There are also zvols, which are the equivalent of block devices (or LVM LVs). You can format these with other file systems like XFS or use them as block storage, but for the most part we will be using just the standard ZFS file system. There are also snapshots and clones, which we will talk about later.

Zpools stripe data across all included vdevs.

There are 7 types of vdevs.

- Disk: A single storage device. Adding multiple drives to the same pool without RAIDZ or mirroring is effectively RAID 0 (not recommended).

- Mirror: Same as a RAID 1 mirror. Adding multiple mirrored vdevs is effectively RAID 10.

- RAIDZ: Parity-based RAID similar to RAID 5. RAIDZ1, RAIDZ2, and RAIDZ3 provide single, double, and triple parity, respectively.

- Hot Spare: A standby drive that takes the place of a failed disk until the failed disk is replaced with a new one.

- File: A pre-allocated file.

- Cache: A cache device (typically an SSD) for the L2ARC. It's generally not recommended to use this unless you absolutely need it.

- Log: A dedicated ZFS Intent Log (ZIL) device, also called a SLOG (separate intent log). These are usually durable, high-performance SLC or MLC SSDs.
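The relationship between vdevs and zpools described above can be modelled in a few lines: the pool stripes across its vdevs, and each vdev supplies its own redundancy. This is a toy Python sketch, not how ZFS computes space. Its assumptions:

- All disks are the same size.
- RAIDZ padding and metadata overhead are ignored.
- Only disk, mirror, and RAIDZ vdevs are covered.
- The class and function names are hypothetical.

```python
# Toy model of zpools and vdevs.
# ASSUMPTIONS: equal-size disks, no ZFS overhead or RAIDZ padding; only
# disk, mirror and raidz1-3 vdevs (spares, cache and log devices ignored).

from dataclasses import dataclass


@dataclass
class Vdev:
    kind: str       # "disk", "mirror", "raidz1", "raidz2" or "raidz3"
    disks: int
    disk_tb: float

    def redundancy(self) -> int:
        """How many disks this vdev can lose and keep working."""
        if self.kind == "disk":
            return 0
        if self.kind == "mirror":
            return self.disks - 1
        if self.kind in ("raidz1", "raidz2", "raidz3"):
            return int(self.kind[-1])
        raise ValueError(f"unsupported vdev type: {self.kind}")

    def usable_tb(self) -> float:
        if self.kind == "mirror":
            return self.disk_tb  # every disk holds the same copy
        return (self.disks - self.redundancy()) * self.disk_tb


def pool_usable_tb(vdevs: list[Vdev]) -> float:
    # The pool stripes across its vdevs, so their capacities add up.
    return sum(v.usable_tb() for v in vdevs)


def pool_survives(vdevs: list[Vdev], failed_per_vdev: list[int]) -> bool:
    # Losing ANY vdev loses the whole pool.
    if len(vdevs) != len(failed_per_vdev):
        raise ValueError("need one failure count per vdev")
    return all(f <= v.redundancy() for v, f in zip(vdevs, failed_per_vdev))


if __name__ == "__main__":
    striped = [Vdev("disk", 1, 4.0) for _ in range(3)]     # effectively RAID 0
    mirrors = [Vdev("mirror", 2, 4.0) for _ in range(3)]   # effectively RAID 10
    raidz2 = [Vdev("raidz2", 6, 4.0)]
    print(pool_usable_tb(striped), pool_survives(striped, [0, 1, 0]))
    print(pool_usable_tb(mirrors), pool_survives(mirrors, [1, 1, 1]))
    print(pool_usable_tb(raidz2), pool_survives(raidz2, [2]))
```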

Features

- Copy on Write.

- Drive pooling

- Snapshots and Clones.

- Checksums for data integrity.

- Automatic File Self Healing.

- Compression (can use significant CPU resources, depending on the algorithm)

- Deduplication (uses significant CPU resources and lots of RAM)

Requirements

All those features come with requirements.

As mentioned above, it is *highly* recommended to use ECC RAM with ZFS. This means you should NOT use an SBC, a consoomer computer, or a shitty NAS like QNAP or Synology. This is a major limitation of ZFS, but you don't necessarily need a 4U monster rack mount to get it done. Used workstation-grade Xeon machines with ECC RAM can be had cheaply on eBay and work great. They look like a normal desktop tower and are quiet. Even something like an i3-9300 on a server motherboard will be enough to run most of the features (though I would not recommend compression or dedup on it).

Your drives need to be exposed to the operating system DIRECTLY. That means if you have a hardware RAID card, it has to be set to "IT mode" or "JBOD" (just a bunch of disks). Not all RAID cards support this, so research before you buy. If you plan on running ZFS in a virtualized environment, you need to pass your SAS HBA card through to the VM so that it can interact with the disks directly. If deduplication is very important to you, you will need a lot of RAM: 1GB per TB is the rule of thumb tossed around a lot. If you do not need deduplication (most people don't), the RAM requirements are lower.

Should I use ZFS?

ZFS has a lot of really great features that make it a superb file system. It has file-system-level checksums for data integrity, self-healing that can correct silent disk errors, copy on write, incremental snapshots and rollback, file deduplication, encryption, and more.

There are, however, some downsides to ZFS RAIDZ, notably inflexibility and upfront cost:

- ZFS RAIDZ vdevs CANNOT BE EXPANDED after being created (true when this was written; OpenZFS 2.3 later added RAIDZ expansion).
- Parity cannot be added either: you cannot change a RAIDZ1 to a RAIDZ2 later on.
- Differently sized disks only contribute the capacity of the smallest disk in the vdev.
- Any data already on the disks is lost when the vdev is created, even if the disks are formatted as ZFS.

In other words, you need to buy ALL of the drives you plan on using in your RAIDZ array at the same time. Unlike other software RAID (or even hardware RAID), you won't be able to change it later, so you have to pre-plan your expansion. It is best to budget your hard drive money, save for the major sales, and buy multiple shuckable external hard drives at once. That way you get the maximum TB per dollar spent and all the benefits of ZFS RAIDZ.

Note that the above limitations and pre-planning only apply if you want RAID-like features. It is perfectly fine to use ZFS on a single drive. Snapshots plus ZFS send/receive make block-level perfect backups to a second drive, which can be a different size. These backups use minimal space, protect against ransomware, and are typically faster than an rsync or cp copy.

So when asking yourself "Should I use ZFS RAIDZ?", you should really be asking two questions:

- "Do I really need RAID?" (Is uptime and availability in case of drive failure very important to me?)
- "Can I budget and plan my purchases around buying multiple drives at once?"

If the answer to both is "Yes", then you can and should use ZFS RAIDZ. If you need RAID but cannot buy your drives all at once, use something else like Snapraid or mdadm. If you don't need RAID but still want all the features of ZFS and meet the requirements listed above, use ZFS on single drives.
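Snapshot + send/receive backups usually work incrementally. Once the backup drive shares a snapshot with the source, only the changes since that snapshot need to be sent. The Python sketch below only builds the `zfs send` / `zfs receive` command lines for that decision; it does not run them. Assumptions:

- The dataset names (`tank/data`, `backup/data`) are hypothetical.
- Snapshot lists are ordered oldest first.
- Snapshots are matched by name only.

Real setups may need extra options (see the zfs-send and zfs-receive man pages), and tools like syncoid handle these details for you.

```python
# Sketch: plan a ZFS snapshot backup with send/receive.
# If source and backup share a snapshot, send only the changes since the
# newest shared one (`zfs send -i`); otherwise send the newest snapshot in full.
# ASSUMPTIONS: hypothetical dataset names, snapshot lists ordered oldest
# first, snapshots matched by name only. Commands are built, not executed.

from typing import Optional


def plan_send(src: str, dst: str, src_snaps: list[str],
              dst_snaps: list[str]) -> Optional[tuple[list[str], list[str]]]:
    if not src_snaps:
        raise ValueError("source has no snapshots to send")
    newest = src_snaps[-1]
    on_backup = set(dst_snaps)
    shared = [s for s in src_snaps if s in on_backup]

    send = ["zfs", "send"]
    if shared:
        base = shared[-1]
        if base == newest:
            return None  # backup already has the newest snapshot
        send += ["-i", f"{src}@{base}"]  # incremental from the shared snapshot
    send.append(f"{src}@{newest}")
    return send, ["zfs", "receive", dst]


if __name__ == "__main__":
    plan = plan_send("tank/data", "backup/data",
                     ["daily-1", "daily-2", "daily-3"], ["daily-1", "daily-2"])
    if plan is not None:
        send, recv = plan
        print(" ".join(send), "|", " ".join(recv))
```

The first backup is a full send. Every later run only transfers the blocks changed since the last shared snapshot, which is why this stays fast and small compared to copying files.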

General Recommendations

Not Recommended:

- Running ZFS on ancient hardware.

- Running ZFS on consoomer motherboards.

- Running ZFS without ECC RAM. If you can afford ZFS, you can afford ECC RAM. No excuses.

- Running ZFS on underqualified hardware (shitty little NAS boxes, SBCs, etc).

- Using "Mutt" pools (zpools with differently sized vdevs).

- Growing your zpool by replacing disks. Instead, back up your data elsewhere, create a new pool, and transfer the data to the new pool. It's much faster. (You could theoretically use a USB drive dock, provided your array is 5 disks or fewer.)

DO NOT

- Run ZFS on top of Hardware RAID.

- Run ZFS on top of other soft RAID.

- Run ZFS in a VM without taking the proper precautions (such as passing your SAS HBA through to the VM, as described under Requirements).

- Run ZFS with SMR drives.

DO

- Run ZFS if you have ECC RAM and a Sandy Bridge or newer processor

- Run ZFS if you care about having the best and most robust set of features in any file system

- Use snapshots (see sanoid, syncoid, and other handy tools to schedule and manage snapshots)

- Use ZFS send/receive for backups

- Sleep soundly with a smile on your face knowing that you have the most based filesystem in the world
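Managing snapshots mostly means deciding which ones to keep. Tools like sanoid automate this. As an illustration, here is a minimal Python sketch of a hypothetical retention policy:

- Keep every snapshot from the last `hours` hours.
- Keep the newest snapshot of each of the last `days` calendar days.
- Mark the rest for pruning.

The policy, its defaults, and the function name are assumptions made for this example. They are not sanoid's configuration or behaviour.

```python
# Sketch: a simple, hypothetical snapshot retention policy.
# Keep: everything from the last `hours` hours, plus the newest snapshot of
# each of the last `days` calendar days (today included). Prune the rest.

from datetime import datetime, timedelta


def snapshots_to_prune(snaps: list[datetime], now: datetime,
                       hours: int = 24, days: int = 7) -> list[datetime]:
    keep = {ts for ts in snaps if now - ts <= timedelta(hours=hours)}

    newest_per_day: dict = {}
    for ts in snaps:
        day = ts.date()
        if now.date() - day < timedelta(days=days):
            if day not in newest_per_day or ts > newest_per_day[day]:
                newest_per_day[day] = ts
    keep.update(newest_per_day.values())

    return sorted(ts for ts in snaps if ts not in keep)


if __name__ == "__main__":
    now = datetime(2023, 1, 10, 12, 0)
    hourly = [now - timedelta(hours=h) for h in range(240)]  # 10 days of hourly snapshots
    pruned = snapshots_to_prune(hourly, now)
    print(f"keep {len(hourly) - len(pruned)}, prune {len(pruned)}")
```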

Btrfs

Snapraid

Hardware RAID

If you bought an old used server with a RAID controller already installed, or perhaps you don't feel like messing with software RAID solutions, you have the option of using hardware RAID rather than software RAID.

Choosing a file system

XFS

ext4

NTFS

If you are using Snapraid as your RAID solution, NTFS-formatted drives are perfectly fine. With Snapraid you are usually pulling out random drives you have lying around, which are most likely NTFS formatted. Otherwise, we do not recommend NTFS unless you are running a Windows server for some reason, since it does not have the same level of support on Linux and UNIX-based systems as ext4 and XFS.

unRAID does not support NTFS. If you are using unRAID you will need to use ext4 or XFS.

Pooling together your storage devices

Pooling drives together into logical volumes saves you the hassle of spreading your data across many physical drives by hand, especially as individual directories outgrow your physical disks. Instead, you can add your drives to a single logical volume that acts just like a normal volume, except that it can be much bigger than any single disk and is more flexible too. ZFS already does this inherently with zpools, but there are other options for pooling multiple different drives together.

LVM

mergerfs

mergerfs is a "union file system". Rather than pooling together partitions or physical hard drives, mergerfs works with file systems. It pools multiple file systems together under one mount point and merges any directories within them. Suppose you have a directory named "movies" on a 2TB disk and another directory named "movies" on a 4TB disk. mergerfs will take both directories and their contents and logically merge them under a new mount point. Anything you can do with a normal directory, such as sharing it or making changes, you can do with the new merged directory.

mergerfs also decides which disk each new file goes to. Depending on its configured create policy, if your 2TB disk is full, new files written to the merged directory will be sent to the 4TB disk. mergerfs is also file system independent, so you can use disks even if they have different file systems formatted on them, including ext4, XFS, NTFS, APFS, ZFS, Btrfs, and many more.

The main benefit of mergerfs is that, unlike LVM and ZFS, it can use drives that are already partitioned, formatted, and have data on them. Also unlike LVM, mergerfs does not spread a single file system across the drives; each file lives whole on one disk. This means that if a drive fails, only the data on that individual drive is lost.

Most people pair Snapraid with mergerfs for redundancy, since both allow disks with preexisting data. You can also use mergerfs on top of ZFS or mdadm if you really feel like it.
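The mergerfs behaviour described above can be illustrated with a small simulation: a merged view of several independent file systems, files placed whole on one branch, and per-drive failure. This Python sketch is a toy model, not mergerfs code. Its assumptions:

- The `Branch` class and helper functions are hypothetical.
- New files go to the branch with the most free space, similar in spirit to mergerfs's "mfs" create policy.
- Path-preserving policies, minimum-free-space settings, and other mergerfs options are not modelled.

```python
# Toy simulation of union-filesystem pooling in the style of mergerfs.
# ASSUMPTIONS: each Branch is one disk's file system; new files go to the
# branch with the most free space; sizes are in GB.

from dataclasses import dataclass, field


@dataclass
class Branch:
    name: str
    free_gb: float
    files: dict[str, float] = field(default_factory=dict)  # path -> size (GB)


def merged_listing(branches: list[Branch], directory: str) -> list[str]:
    """Union of every branch's files under `directory`."""
    prefix = directory.rstrip("/") + "/"
    return sorted({p for b in branches for p in b.files if p.startswith(prefix)})


def create_file(branches: list[Branch], path: str, size_gb: float) -> str:
    """Place a new file, whole, on the branch with the most free space."""
    candidates = [b for b in branches if b.free_gb >= size_gb]
    if not candidates:
        raise OSError("no branch has enough free space")
    target = max(candidates, key=lambda b: b.free_gb)
    target.files[path] = size_gb
    target.free_gb -= size_gb
    return target.name


def files_after_failure(branches: list[Branch], failed: str) -> list[str]:
    """Files still reachable when one branch (disk) dies."""
    return sorted(p for b in branches if b.name != failed for p in b.files)


if __name__ == "__main__":
    disk_2tb = Branch("2tb", free_gb=0.5, files={"/movies/a.mkv": 4.0})
    disk_4tb = Branch("4tb", free_gb=1500.0, files={"/movies/b.mkv": 8.0})
    pool = [disk_2tb, disk_4tb]
    print(merged_listing(pool, "/movies"))
    print(create_file(pool, "/movies/c.mkv", 6.0))
    print(files_after_failure(pool, failed="2tb"))
```

Because each file lives whole on one branch, losing the 2TB disk only removes the files stored on it. Everything on the 4TB disk stays readable.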