
Home server

Home servers are about learning and expanding your horizons. De-botnet your life. Learn something new. Serving applications to yourself, your family, and your frens feels good. Put your /g/ skills to good use for yourself and those close to you. Store their data with proper availability, redundancy, and backups, and serve it back to them with a /comfy/, easy-to-use interface.

Most people get started with a NAS. It’s nice to have a /comfy/ home for all your data, streaming your movies/shows around the house and to friends. Know all about NAS? Learn virtualization. Spin up some VMs. Learn networking by setting up a pfSense box and configuring some VLANs. There's always more to learn and chances to grow. Think you’re god tier already? Set up OpenStack and report back to /hsg/.

Things that are online today might not be online forever. It's good to have a copy of something because you never know when it might get taken down due to copyright strikes or Big Tech censorship.

/hsg/ OP Pasta

Find below the standard pasta because the /hng/ tranny keeps fucking it up, feel free to edit this with alternatives and new links as time goes on

/hsg/ - Home Server General

READ THE WIKI! & help by contributing: https://wiki.installgentoo.com/wiki/Home_server

>NAS Case Guide. Feel free to add to it: https://wiki.installgentoo.com/wiki/Home_server/Case_guide

/hsg/ is about learning and expanding your horizons. Know all about NAS? Learn virtualisation. Spun up some VMs? Learn about networking by standing up an OPNsense/pfSense box and configuring some VLANs. There's always more to learn and chances to grow. Think you’re god-tier already? Set up OpenStack and report back.

>What software should I run? Install Gentoo. Or whatever flavour of *nix is best for the job or most comfy for you. Jellyfin/Plex to replace Netflix, Nextcloud to replace Google, Ampache/Navidrome to replace Spotify, the list goes on. Look at the awesome self-hosted list and ask.

>Why should I have a home server? /hsg/ is about learning and expanding your horizons. De-botnet your life. Learn something new. Serving applications to yourself, your family, and your frens feels good. Put your /g/ skills to good use for yourself and those close to you. Store their data with proper availability, redundancy, and backups, and serve it back to them with a /comfy/, easy-to-use interface.

>Links & resources

Server tips: https://anonbin.io/?1759c178f98f6135#CzLuPx4s2P7zuExQBVv5XeDkzQSDeVkZMWVhuecemeN6

https://github.com/Kickball/awesome-selfhosted

https://old.reddit.com/r/datahoarder

https://www.labgopher.com

https://www.reddit.com/r/homelab/wiki/index

https://wiki.debian.org/FreedomBox/Features

List of ARM-based SBCs: https://docs.google.com/spreadsheets/d/1PGaVu0sPBEy5GgLM8N-CvHB2FESdlfBOdQKqLziJLhQ

Low-power x86 systems: https://docs.google.com/spreadsheets/d/1yl414kIy9MhaM0-VrpCqjcsnfofo95M1smRTuKN6e-E

Cheap disks - https://shucks.top/ & https://diskprices.com/

Old thread>>

Hardware

What hardware you get depends mostly on your use case. A simple file server can be run on an SBC with a couple of hard drives attached. If you want to do fancier things like virtualization, streaming 4K movies, etc., you are going to want better hardware. If you plan on using ZFS or Btrfs, server grade hardware and ECC RAM are recommended but not required.

Server options

There are many roads to the Home Server. Each one has upsides and downsides. It's up to you to decide what works best for your requirements.

SBC and NUC

For simple home server use, such as a file server or single-user direct-play Plex server, these can be an appealing, inexpensive, and energy-efficient option. Expect performance issues if you try to scale, though: don't expect to be able to run multiple virtual machines or do heavy transcoding, and you have few options for expansion and little to no options for upgrading. Forget a hardware RAID card or having any SATA ports at all. You likely won't be able to add much more RAM, and definitely won't be able to increase processing power unless you go the clustering route and purchase multiple units. If your use case is compute intensive or would require expansion cards (a GPU, for example), SBCs are likely not a good option.

If you decide to go with an ARM-based board be aware that some software will not work because it is only available for x86-based CPUs. The reason is usually that the software is proprietary and was only ever released for x86. Among ARM-based SBCs the Raspberry Pi has by far the best software support as it has the largest userbase.

Connecting hard drives via USB docks may have some performance impact. Use USB 3 where possible and don't attach too many drives to a single port.

- Raspberry Pi

- Rpi4 recommended - Better Ethernet and more powerful than the 3b.

- Odroid

- Odroid N2+ recommended

- Odroid HC4 storage server - Cheap two drive system

- Odroid HC2 is an option - if you don't mind dipping your toes into distributed systems.

- NanoPi

- NanoPi M4V2 More expensive than a Rpi4 but more powerful hardware with the option to install a x4 SATA HAT.

- Intel NUC

- Search your NUC here for more information on it

NUCs have significantly more power than an SBC, and are exclusively Intel-based. They run the gamut from small Celerons to some of Intel's most powerful mobile CPUs. Very feature-rich: almost all of them include Quick Sync for transcoding, and have some expansion capability (adding/changing RAM, an additional SSD in some cases). NUCs will also be significantly more expensive than the SBCs listed above.

Repurpose Old Hardware

If you have an old gaming PC, workstation, laptop, or spare parts lying around, you might be able to get away with using them as your server (provided they are not too old). Performance and capability will vary wildly from machine to machine. When in doubt post specs in /hsg/ and ask.

Laptops are not really designed for 24/7 use, but their battery does act as a built-in UPS to a certain extent. Preferably use one with USB 3 or better; older laptops might only have USB 2 ports, which will bottleneck any attached HDDs.
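To put a rough number on the USB bottleneck, here is a minimal sketch. The bus figures are the nominal signalling rates (480 Mbit/s for USB 2.0, 5 Gbit/s for USB 3.0); real-world throughput is lower because of protocol overhead. The 150 MB/s drive speed and the function names are assumptions made up for illustration.

```python
# Nominal signalling rates in megabits per second (real throughput is lower).
USB_NOMINAL_MBIT = {"usb2": 480, "usb3_gen1": 5000}


def usb_ceiling_mb_s(bus: str) -> float:
    """Theoretical upper bound in MB/s (8 bits per byte, overhead ignored)."""
    return USB_NOMINAL_MBIT[bus] / 8


def is_bottleneck(bus: str, drive_mb_s: float) -> bool:
    """True if the drive can move data faster than the bus can carry it."""
    return drive_mb_s > usb_ceiling_mb_s(bus)


# Hypothetical HDD with ~150 MB/s sequential throughput (assumed figure).
hdd_mb_s = 150.0
for bus in USB_NOMINAL_MBIT:
    print(bus, usb_ceiling_mb_s(bus), "MB/s ceiling, bottleneck:",
          is_bottleneck(bus, hdd_mb_s))
```

Swap in the sequential speed of your own drive to see which side of the line it lands on.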

If you already own the hardware, this option is free, which can be very appealing and a great way to get started. Keep in mind that a lot of old PCs are very power hungry (for example with Pentium 4 CPUs). In some countries this means that your power bill for this machine could be more expensive than the cost of some new cheap SBCs and their power bills combined.
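A back-of-the-envelope way to check the power bill claim for your own situation. The wattages and the electricity price below are made-up placeholders, not measurements; plug in numbers from a wall meter and your own tariff.

```python
def annual_power_cost(avg_watts: float, price_per_kwh: float) -> float:
    """Yearly cost of a machine that runs 24/7 at the given average draw."""
    hours_per_year = 24 * 365
    kwh_per_year = avg_watts * hours_per_year / 1000
    return kwh_per_year * price_per_kwh


# Assumed figures for illustration only.
old_pc = annual_power_cost(avg_watts=120, price_per_kwh=0.30)
sbc = annual_power_cost(avg_watts=5, price_per_kwh=0.30)
print(f"old PC: {old_pc:.2f}/year, SBC: {sbc:.2f}/year, "
      f"difference: {old_pc - sbc:.2f}/year")
```

If the yearly difference is more than the price of a new SBC, the "free" machine is not actually free.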

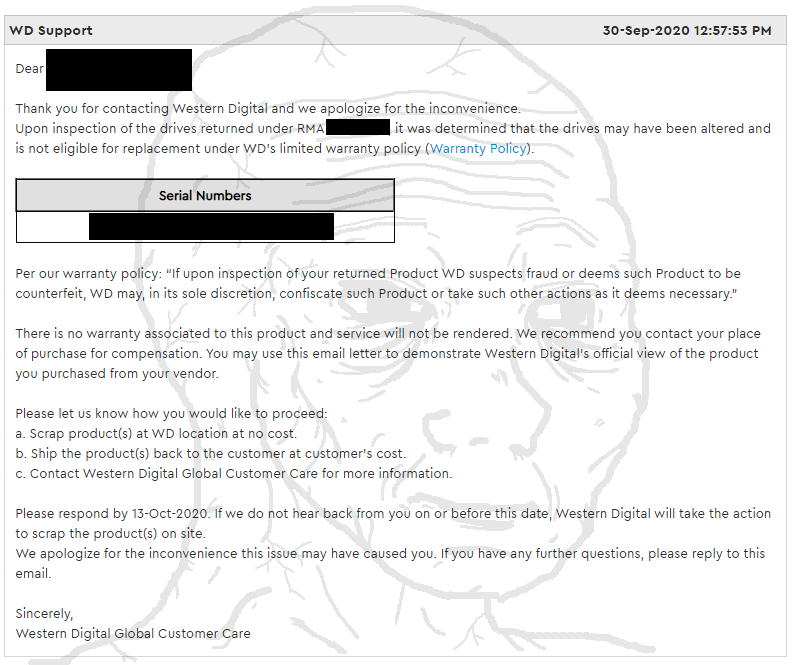

Build Your Own

If you have the money, buying new hardware is a viable (but expensive) option. Knowing exactly what you are getting and peace of mind that you can RMA any DOA items, as well as reasonable shipping prices are good reasons to buy new. A combination of new hardware and repurposed older hardware is also an option if you are on a bit of a budget.

If you are looking to build a ZFS/FreeNAS server be sure to get a motherboard and CPU that support ECC RAM. Server motherboards are recommended as they have many features such as IPMI, Intel NICs, NIC teaming support, and more.

Supermicro/Asrock Rack are good options. "Prosumer" boards are usually incredibly expensive and not worth the money.

Modern AMD Ryzen CPUs all "unofficially" support ECC RAM, just make sure your motherboard supports it. Pretty much all AMD CPUs also support virtualization.

If using Intel CPUs check ark.intel.com for information on your CPU. Some features to look for:

- Intel Quick Sync Video allows for hardware accelerated transcoding.

- VT-x and VT-d are must haves if you plan on using virtualization of any kind.

- ECC RAM Support

Buy Used

Buying used enterprise hardware can be a cheap, but somewhat unreliable, option. Waiting for a good deal might not be for everyone, but the rewards are great: tremendous amounts of storage potential at a relatively cheap price. Some rackmount servers will even come with drives preinstalled.

Be aware though, rackmount servers are usually pretty loud, and many older Xeons can be extremely energy inefficient. For most popular brands/configurations there are YouTube videos specifically for the sound. Search for "R720 noise" on YouTube and you will find videos of people putting their microphones up to them so you can assess the noise levels for yourself and your application. Don't let the noise deter you unless you plan to have this server in a living space in your home. Even if you do, anything 2U or 4U usually has the option for a "quiet mod" where you swap the fans for Noctuas or similar, drastically reducing the noise the machines create.

Things to look out for when buying used:

- 32-bit systems have a hard limit on RAM. Avoid at all costs.

- Some older legacy systems do not support UEFI and thus cannot boot UEFI OSes.

- Older hardware specs may become performance bottlenecks (earlier SATA/PCIe/SAS/USB revisions).

- Some very old (8-10 years) high-end CPUs actually perform worse than modern low-to-mid-range CPUs.

- Lack of support for potentially desired features such as QSV, 1Gb/10Gb Ethernet, M.2, etc.

- Some disk shelf/server backplanes are SAS only and won't accept standard SATA drives.

Good places to find old server hardware:

Case mods

Prebuilt NAS

Only buy a prebuilt NAS if you want to spend more and get less.

They are typically woefully underpowered for the price and you’re better served with a $65 Odroid than a $300 QNAP/Synology with a shitty Celeron and 1GB of RAM. That said, they are the most noob friendly option, with a GUI for setup.

Storage

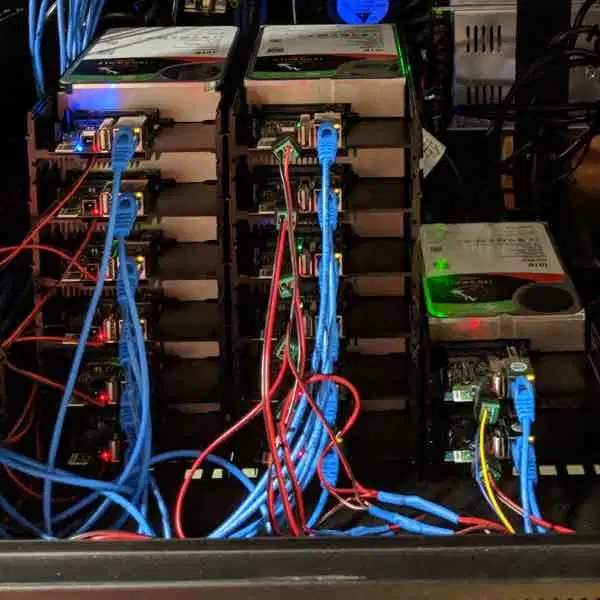

Shucking

It's massively cheaper to buy WD Easystores or WD Elements (when they go on sale) than it is to buy an equivalent size NAS hard drive like WD Red/IronWolf. Just remember: YOU VOID YOUR WARRANTY. If your drive fails you are most likely fucked. When you buy regular NAS drives you are basically paying more for the warranty.

Some other things to consider if you decide to shuck:

- Shucked drives under 8TB might be SMR drives.

- Shucked drives lack the middle mounting hole that most other drives have. You may need an adaptor for your HDD trays if your case doesn’t support them. Some cases might not have adaptors at all, research before buying your case!

- Some 8 and 10TB drives are air-filled rather than filled with helium. These air-filled drives can run significantly hotter than the helium ones. Check the model number with CrystalDiskInfo before shucking; if it has an H it is most likely a helium drive. If you have airflow constraints in your case, it might be better to try and get helium drives, otherwise it shouldn't be much of an issue.

3.3v pin issue

Shucked drives WILL NOT BOOT with most consumer power supplies. This is because of a feature on enterprise drives that lets administrators reboot hard drives by powering the 3.3 volt pin which isn’t used on consumer hard drives. Consumer PSUs, of course, always power this pin, so the hard drive will be stuck in an infinite boot loop and never power on. This can be solved by covering the first three pins on the hard drive with insulating Kapton tape.

- DO NOT use liquid electrical tape. This can damage the drive.

- DO NOT cut the SATA power cable. This can damage the drive and your PSU.

- Molex to SATA adapters DO work but be careful, as some of the poorly made ones can catch fire. I wouldn’t risk it.

SMR v CMR

SMR stands for "shingled magnetic recording". It's an alternative to the conventional magnetic recording (CMR) that traditional hard drives use. While SMR technology allows for greater data density, SMR drives are also slow at writes compared to CMR. These drives are bad for NAS use cases and especially bad for ZFS due to compatibility issues. Just avoid them altogether. All Seagate NAS drives are CMR. Easystores/Elements 8TB and above should be safe.

SSD

SSDs are recommended for the OS and programs only, or for use in cache, or L2ARC cache/SLOG in ZFS.

Don't buy SSDs for main storage unless you want to spend tens of thousands. Check out the SSD buying guide for more on SSDs. If you do have a large SSD array, post the details in /hsg/ so we can all drool.

Expanding Your Storage

If you find you have run out of SATA ports on your motherboard but require more storage, there are a number of options for increasing the number of drives your server can support. The best and recommended approach is to use a SAS HBA with SAS to SATA breakout cables. Each SAS port can support up to 4 SATA drives (or even more if you use an expander). You can find used LSI SAS HBAs on eBay for relatively cheap; these typically have 2 internal ports, for 8 SATA drives total. Avoid SAS1 cards as they are far too old by now and have some limitations. If your case can no longer support more drives, you may want to look into buying an external SAS HBA, which will allow you to connect drives in an external enclosure directly to your server.
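The port arithmetic above can be sketched as follows, assuming direct breakout cables only (no expanders) and the 4-drives-per-SAS-port figure from the text. The function names and the 2-port default are illustrative assumptions, not anything from a real tool.

```python
DRIVES_PER_SAS_PORT = 4  # one breakout cable fans a SAS port out to 4 SATA drives


def max_direct_drives(onboard_sata_ports: int, hba_sas_ports: int) -> int:
    """Total drives attachable without a SAS expander."""
    return onboard_sata_ports + hba_sas_ports * DRIVES_PER_SAS_PORT


def hbas_needed(extra_drives: int, sas_ports_per_hba: int = 2) -> int:
    """How many HBAs it takes to attach the given number of extra drives."""
    per_hba = sas_ports_per_hba * DRIVES_PER_SAS_PORT
    return -(-extra_drives // per_hba)  # ceiling division


# Hypothetical board with 6 onboard SATA ports plus one 2-port HBA.
print(max_direct_drives(onboard_sata_ports=6, hba_sas_ports=2))
print(hbas_needed(extra_drives=12))
```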

- Some videos on SAS controllers and cables which I found very helpful. If you are new to using SAS you should watch these:

SATA HBAs and port multipliers/expanders are not recommended. They are garbage and not worth buying. SATA port multipliers specifically can cause issues when you try to use any kind of RAID with them.

There are some counterfeit LSI cards on the market, avoid Chinese sellers, sellers with no return policy, etc.

Racks and Cases

- Home server case guide

- Lack rack - Meme-y but practical and cheap solution for rack-mount equipment. Be wary of putting too much weight on them though

Operating systems

There are many options for which OS to use for your server. Ultimately it depends on your needs and budget.

- Standard Linux like Ubuntu supports software RAID and file systems like OpenZFS, and runs on pretty much anything.

- OMV is good enough if all you are storing is rarely accessed media and is GUI based if you prefer that over a command line.

- TrueNAS CORE (formerly FreeNAS) is BSD based and fairly simple to install and use but server grade hardware and ECC RAM is recommended.

- Proxmox and VMware ESXi if you want your server to be primarily about virtualization. If this is your only server, this may increase the difficulty of creating ZFS or RAID pools. Not impossible, just more tricky.

See Home server/Choosing an Operating System for more information.

Linux

These are all server-specific or at least minimal operating systems without a desktop environment or other bloat preinstalled.

- Debian Stable is one of the best operating systems to use for a server. It is not too hard to manage, but at the same time customizable enough for your server's purposes. Has plenty of documentation.

- Ubuntu Server is based on Debian Testing. Slightly less stable than Debian, but has far less outdated software in its repos. Recent LTS releases have focused on providing heavy integration with OpenStack. Does odd things with packages and versions (lib*-ubuntu1.l2). Arguably the best option for users new and old.

- CentOS is kil. RIP

- Alpine Linux is an extremely lightweight hardened distro using musl and busybox instead of glibc and coreutils. Uses OpenRC instead of systemd. Commonly used as a base for Docker images thanks to its small size, but works well on bare metal too. Recommended, especially for more experienced users.

- Gentoo is usually too much trouble to be worth it, but it works and sees occasional server usage.

- Arch and other rolling release distros are not good choices as they are generally unstable and often break/change behaviour on updates.

YunoHost

Debian-based. Pre-configured with a web interface (accessible through its local gateway) and an app catalog for server software. A great choice for beginners.

If you're lost, just go with YunoHost or Ubuntu. Use mdadm, ZFS on Linux (ZoL) or Snapraid for data redundancy.

Open Media Vault

Good for storing infrequently changed files like media files.

Like FreeNAS/TrueNAS, OMV is primarily a GUI tool, but it is Debian based and a command line is always an SSH session away. All the typical NAS configuration is available to you in the GUI (Samba/NFS/shares/user management/etc). Unlike TrueNAS, however, OMV does not force ZFS on you. OMV is ideal if you want a GUI on an SBC or less powerful hardware.

Supports Snapraid as a plugin. Can be used with mergerFS to pool drives together.

- OMV Forums

- OMV Extras - Needed for mergerFS

- Installation guide

unRAID

Comes with its own RAID solution that technically isn't real RAID because all parity is stored on one or two disks. Not free, you need to fork over some money to buy it.

Supports differently sized physical disks and adding hard drives to expand as needed.

Unraid 6.8.3-6.9.2

SHA256: 18F75CA34A39632DC07270510E453243753CFF302F3D5ADD4FA8813D4ADB304D

magnet:?xt=urn:btih:180782e4ff3e00b7efc8a0529239b896e0557f72&dn=unraid692.7z

BSD

All are highly regarded by their users.

TrueNAS CORE

TrueNAS CORE is the free version of the premium TrueNAS and successor/replacement for FreeNAS. TNC is a FreeBSD based OS that utilizes ZFS for storage and has many available plugins for things like Plex, BitTorrent, and more. Has simple, easy to use GUIs to set up your services such as Samba shares, etc.

Keep in mind it will install to the ENTIRE DRIVE and you won't be able to use the install drive for anything else. A small, cheap, M.2 SSD is a good option for the OS drive. Server grade hardware and ECC RAM is recommended.

Hypervisor

You may want to consider using an OS designed for virtualization/containerization. Virtualization allows you to run multiple independent operating systems on the same hardware simultaneously. You can use this for home lab, or game servers, or even virtualize your desktop instead of using a big tower.

Containers add the ability to isolate processes to make a more stable server, and also allow you to migrate services from one server to another on the fly.

Proxmox

A Free Linux based Virtualization Environment that has built in ZFS support. Utilizes KVM, QEMU for virtual machines and LXC for containers.

Also Supports Ceph and GlusterFS for distributed storage and clustering.

Good alternative to VMware, but is lacking in some areas. Good enough for most people's needs.

ECC RAM is recommended as per usual with ZFS.

VMWare ESXi

If you've ever worked in a datacenter on managed IT for big business you will be familiar with VMWare ESXi, it's the most popular, feature rich hypervisor available. Unfortunately, it is not free, and only has a limited free tier with 8 core per VM limit. No vSphere, or most vStorage options like vMotion and distributed switching. For most people this is okay, but if you are a home-server enthusiast you might want to play around with all the features they have available. It's an excellent option unless you don't like to use proprietary software or don't want to go through the trouble cracking to get all of the features on the latest version.

If you use version 6.5 or 6.7 you can use this key to unlock all these features.

- vCenter: 0A0FF-403EN-RZ848-ZH3QH-2A73P

- vSphere: JV425-4h100-vzhh8-q23np-3a9pp

VMware 7.0 has dropped support for Westmere-EP/Gulftown (x5xxx) CPUs. If your system has these old CPUs you should consider upgrading to something later than Sandy Bridge if you want to use the latest version of ESXi.

SmartOS

SmartOS is not Linux, nor is it Solaris (but it is Illumos underneath). It's a type 1 hypervisor platform that is/was the core of Joyent's public cloud platform (has since been sold off to MNX who are claiming continued support for opensource involvement).

Similar to TrueNAS, ZFS is not an opt-in feature, and unlike most operating systems it does not require an installation disk: the system is entirely ephemeral, running from a USB stick which can be pulled out at any time. All of your VMs and other persistent data are kept on whatever zpool you name "zones". You are free to add and remove other pools, and all of the ZFS features are available in the gz ("global zone"), which is the base environment you are given to manage the system. What you can't do in the gz is install most packages, set up additional users, make any persistent changes to config files, or run services.

Instead, everything you do happens under zones. These are very similar to BSD jails or Docker containers; the main difference is that they are a first-class kernel feature and have exceptional security and efficiency properties. As they are running on bare metal with sandboxed ZFS datasets, it's possible to host Samba and NFS shares of the same filesystem the VMs are stored on. In fact, there's no reason you couldn't run multiple Samba zones with access to separate areas of storage, which means that even with a rootkit installed on one of your Samba servers the other would remain completely isolated.

If you don't care for zones you can also set up full-on HVM instances using either KVM or Bhyve, the latter being far more performant and able to run the most recent Windows versions. VNC video consoles and serial ports are automatically set up whenever you boot a VM for remote management.

The gz comes with 3 cli tools for doing day to day tasks: vmadm, imgadm and zlogin. Running man followed by one of these commands will get you some very decent documentation, so l3rn to read.

Suggested reading:

- Bryan Cantrill sperging out about containers

- How to delegate datasets for use as a file server and Creating said file server zone

- Contact information everyone on IRC is very helpful so don't be a cunt

SBC Operating Systems

If you are using an SBC or NUC for your server, these are potential options to use over standard Linux.

FreedomBox

Runs on virtually any SBC.

Setup is incredibly simple. Installing new software and services can be done with the click of a button.

Lets you easily share files, host websites, sync files, and more. The number of available applications is a bit limited, however.

YunoHost

Debian-based. Like FreedomBox, incredibly simple.

File Systems and RAID

You may want to consider a RAID array for long-term file storage. A proper RAID array can protect you against sudden drive failures, and some software RAID solutions have extra features to combat data degradation.

When deciding on what RAID level to use, try to aim for at least two disk redundancy for arrays larger than 4 disks. Rebuilding a RAID array is an intensive process and it's not uncommon for a second disk to fail during the process. RAID 5 and equivalents only offer 1 disk redundancy, so if another disk fails during your rebuild you're fucked and any data not backed up is lost.
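A small sketch of the trade-off, assuming a parity-style array of equal-sized disks (RAID 5/6 or their equivalents) and ignoring file system overhead. The function name, its fields, and the example sizes are hypothetical; the guideline check simply encodes the "two-disk redundancy above 4 disks" rule of thumb from the paragraph above.

```python
def raid_summary(disks: int, disk_tb: float, parity_disks: int) -> dict:
    """Usable space and fault tolerance for a parity-style array."""
    if parity_disks >= disks:
        raise ValueError("need at least one data disk")
    return {
        "usable_tb": (disks - parity_disks) * disk_tb,
        "survivable_failures": parity_disks,
        # Rule of thumb: arrays larger than 4 disks want >= 2 disks of redundancy.
        "meets_guideline": disks <= 4 or parity_disks >= 2,
    }


# Hypothetical 6 x 8TB array with single vs double parity.
print(raid_summary(disks=6, disk_tb=8, parity_disks=1))
print(raid_summary(disks=6, disk_tb=8, parity_disks=2))
```

The second disk of parity costs one disk of usable space, which is usually cheaper than losing the array during a rebuild.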

For more information and guides on how to set up your storage see Home Server/Setting up your Storage

Software RAID vs Hardware RAID

Software RAID typically has a number of features that are more beneficial than just standard RAID. Best in class at this moment is ZFS, which has automatic file self healing and file system level checksums to combat bitrot.
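To make the idea of checksum-based self-healing concrete, here is a toy illustration. It is not how ZFS is implemented: ZFS checksums blocks rather than whole files and uses its own checksum algorithms, so SHA-256 over a byte string is just a convenient stand-in. All names are made up for the example.

```python
import hashlib
from typing import List, Optional


def checksum(data: bytes) -> str:
    """Fingerprint recorded when the data is first written."""
    return hashlib.sha256(data).hexdigest()


def find_healthy_copy(copies: List[bytes], expected: str) -> Optional[bytes]:
    """Return the first redundant copy whose checksum still matches, if any."""
    for copy in copies:
        if checksum(copy) == expected:
            return copy
    return None


original = b"family photos"
stored_sum = checksum(original)

# Simulate bitrot on one mirror: a single flipped character.
rotted = b"family phot0s"
healed = find_healthy_copy([rotted, original], stored_sum)
print("recovered intact copy:", healed == original)
```

Without the stored checksum there would be no way to tell which of the two copies is the good one, which is the gap plain RAID leaves open.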

Btrfs is also good if you want an alternative to ZFS, but is still in development so be careful.

UnRAID is more noob friendly (but you pay for it).

Snapraid + mergerFS is a viable (free) alternative to UnRAID if you don't feel like spending money on your OS.

ZFS

A long standing, reliable file system and software RAID solution that works on BSD and Linux.

Supports up to 3 disk redundancy (RAIDZ3) along with equivalents of the traditional RAID levels 0, 1, 5, 6, and 10 (albeit under different names), and has checksums and scheduled scrubbing to prevent data corruption. Remember to configure scrubbing if it is not enabled already.

Has some limitations, one major one being that expansion is cumbersome, so consider planning out your pool well in advance. If you are slowly adding one drive at a time with various capacities over the years, go with Btrfs.

If you plan on using RAIDZ, make sure you know what your array is going to be beforehand; you won't be able to add to it later (for now, RAIDZ expansion is in the works).

ZFS recommends 8GB of memory as a minimum (it can run on as little as 2GB, but you should consider Btrfs if you only have that much RAM). If you want better performance, add as much as you can. Your memory serves as a cache > the bigger the cache > the more cached data you can store > the better the performance. 1GB per formatted TB is a good middle ground before you start to see diminishing returns. Also note that ECC memory is recommended for obvious reasons, and normally isn't much of a premium over regular RAM. It's not mandatory, but skip it at your own risk. See this paper for more information about why you should run ECC.
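The memory rule of thumb above can be written down as a one-liner. This is only a sketch of the guideline as stated here (8GB floor, roughly 1GB per formatted TB), not an official sizing formula; the function name and defaults are assumptions.

```python
def suggested_ram_gb(pool_tb: float, minimum_gb: float = 8,
                     gb_per_tb: float = 1) -> float:
    """Rule-of-thumb RAM for a ZFS box: a floor, then ~1GB per formatted TB."""
    return max(minimum_gb, pool_tb * gb_per_tb)


# Hypothetical pool sizes in formatted TB.
for pool_tb in (4, 16, 48):
    print(f"{pool_tb}TB pool -> about {suggested_ram_gb(pool_tb):g}GB RAM")
```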

mdadm

A tool for creating and managing Linux software RAID arrays.

You can create file systems directly on the RAID arrays, and then use mergerFS to pool the file systems together.

Technically supports disks of different sizes, but it requires multiple partitions on the drives and is not recommended.

No built-in checksums. Can use dm-integrity to detect errors, but has no way of dealing with them.

LVM

Required learning for management of drives on Proxmox.

A bit more confusing than partitioning drives normally, but very flexible. Allows for thin provisioning of storage, and pooling of multiple drives or mdadm arrays into logical volumes.

XFS

Another reliable file system. Unlike ZFS it doesn't have built in software RAID features.

ext4

Default file system for most Linux distros. Does everything a good file system should do and more.

Btrfs

It's "B-Tree", not "Butter".

Has many of the same features as ZFS, including checksums and self healing.

STILL UNDER DEVELOPMENT USE AT OWN RISK. Potential for data loss.

RAID 1 features are stable on the most recent Linux kernels.

mergerFS

A Union file system that pools multiple file systems together under one mount point, allowing them to appear as one.

Has some advantages over LVM: you can use multiple disks with data already on them instead of having to create LVM volumes/groups. If a disk fails, data loss can be less drastic, since the data is not striped across multiple disks (as it can be with LVM).

Works with multiple different file systems at the same time, including Windows' NTFS. Use with Snapraid or mdadm for disk redundancy.

Available as a plugin for OMV.

Snapraid

Has an impressive list of features including up to 6 disk redundancy and the ability to add hard drives to expand as needed.

Supports differently sized disks, allowing for more flexibility with expansion (your data disks must be equal to or smaller than your parity disks! If you try to add a new data disk that is larger than your parity drives you will run into issues).
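A quick sanity check for the sizing rule above, sketched under the assumption that you simply list disk sizes in TB. This is not part of Snapraid itself and the function name is made up.

```python
from typing import List


def oversized_data_disks(data_tb: List[float], parity_tb: List[float]) -> List[float]:
    """Data disk sizes that are larger than the smallest parity disk."""
    smallest_parity = min(parity_tb)
    return [size for size in data_tb if size > smallest_parity]


# Hypothetical layout: one 8TB parity disk and a new 10TB data disk.
problems = oversized_data_disks(data_tb=[4, 8, 10], parity_tb=[8])
print("data disks too big for parity:", problems)
```

If the list is not empty, the usual fix is to make the biggest disk a parity disk and demote the old parity disk to data.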

Technically not "real" RAID and has some limitations. Read the manual.

Can be used with mergerFS to pool drives together while retaining a level of redundancy.

Available as a plugin for OMV.

Containers

Containers are a method of isolating running software from both the host OS and other software. You may also hear them called Jails or Chroot Jails if you are running some variant of BSD (such as TrueNAS CORE/FreeNAS).

There are a number of reasons why you would want this:

- Less overhead than standard virtual machines because you aren't virtualizing the kernel.

- Isolated software cannot interfere with each other or the host. If a container crashes it won't affect anything else.

- Like VMs, containers are portable. You can create a container, configure it however you want, and deploy it anywhere.

- Like VMs, removing containers and starting from scratch or a backup in the event of a fatal crash is easy.

- Docker and Podman containers are incredibly easy to deploy and you can find pre-configured container images online.

Best practice is to keep the base OS as clean as possible and install each individual application (such as Plex, Samba, etc) in its own container. This makes your server much more stable since there is virtually no chance of a containerized application crashing your server, or of an installation gone wrong ruining your host OS.

LXC and LXD

LXC is the standard Linux container implementation, available on most distros. You will likely be using these if you are running a Proxmox server. Since Linux containers are essentially just separate instances of Linux, you can't run Windows programs in them without using WINE.

LXD is a newer, more user friendly container manager built on top of LXC. Has better management options for containers.

Docker

Instead of running as though it was an entire OS like LXC, Docker only virtualizes a single application. Can run on Windows as well as Linux. You will still need WINE to run Windows apps on Linux. Freemium software. Base software is free for individuals (you).

Podman

An alternative to Docker. Those using Docker can easily switch without issues. Unlike Docker, it does not use a single large server daemon. Uses "pods" which can contain more than one container.

Jails

Jails are BSD's version of containers. Since TrueNAS CORE/FreeNAS is FreeBSD based you will be using these instead of LXC/LXD.

- TrueNAS Jail documentation

- Give Jails access to host storage - Jail version of Bind mounting

- FreeBSD Jail documentation

Server software

For a greater range of self hosting solutions and services see awesome self hosted software.

System administration software

For a greater range of sysadmin solutions and services see awesome sysadmin software.

Security

Unlike a desktop, a server is always working, accepts connections from the internet (your desktop is normally firewalled and doesn't have any ports open) and is easy to discover (especially if you send mail from it). It's under a bit more risk, and it's worth thinking about what intrusions you will try to prevent and how. Refer to Security#Threat_analysis to understand how and what threats you can mitigate.

Basic measures include:

- Privilege separation

- If you are behind a router, only forward ports you need

- Your firewall should reject all traffic which isn't either in response to an existing connection, or destined for a forwarded port

- Make sure to keep your router firmware updated, as vulnerabilities are often patched in newer versions (at least, from the companies which bother even releasing them). If your device doesn't receive support in the form of firmware updates and security fixes, consider running community-maintained firmware such as OpenWRT

- Regularly update software and kernels when updates become available for your distro (it is far better to fix what updates break than to get owned)
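The firewall rule from the list above boils down to a default-deny decision with two exceptions. The sketch below models that logic only; it is not a real firewall and does not correspond to any particular tool's configuration syntax. The `Packet` class and the forwarded port numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import AbstractSet

# Hypothetical set of ports you deliberately forward to the server.
FORWARDED_PORTS = frozenset({22, 443})


@dataclass(frozen=True)
class Packet:
    dst_port: int
    established: bool  # True if it belongs to a connection you initiated


def allow_inbound(packet: Packet,
                  forwarded_ports: AbstractSet[int] = FORWARDED_PORTS) -> bool:
    """Default deny: pass only replies and traffic to forwarded ports."""
    return packet.established or packet.dst_port in forwarded_ports


print(allow_inbound(Packet(dst_port=443, established=False)))    # forwarded port
print(allow_inbound(Packet(dst_port=51000, established=True)))   # reply traffic
print(allow_inbound(Packet(dst_port=3306, established=False)))   # everything else
```

The point of the design is that forgetting to list a port fails closed: a service you never meant to expose stays unreachable from outside.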

External Links

- Home server hardware - Hayden James' home lab setup

- STH Forums - Good general resource for server questions

- Learn Command line

- HP T620 Plus - Decent cheap computer. You can use it as a VPN, pfSense firewall, and more

- Tiny Certificate Authority For Your Homelab